Check Point Research has uncovered VoidLink, a sophisticated, modular malware framework almost entirely authored by artificial intelligence.

The researchers first documented VoidLink last week, but today they have published a new report portraying it as the first convincingly documented case of high-complexity, AI-generated malware. While previous examples of AI-assisted malware, such as those linked to amateur threat groups like FunkSec, largely mimicked existing tools, VoidLink was built as a comprehensive platform from the ground up. Operational security mistakes by its developer exposed internal planning materials, code artifacts, and detailed timelines, offering an unusually transparent look into how a single actor leveraged AI to develop enterprise-grade malware in just under a week.

The investigative team at Check Point tracked VoidLink’s development from its earliest stages. Initial assessments of the malware’s use of eBPF, LKM rootkits, and cloud-centric modules led researchers to suspect the involvement of an advanced, organized threat actor. However, a mismatch between the observed pace of development and the documented timeline led to a startling conclusion: the framework had been generated and managed by AI, not a coordinated team.

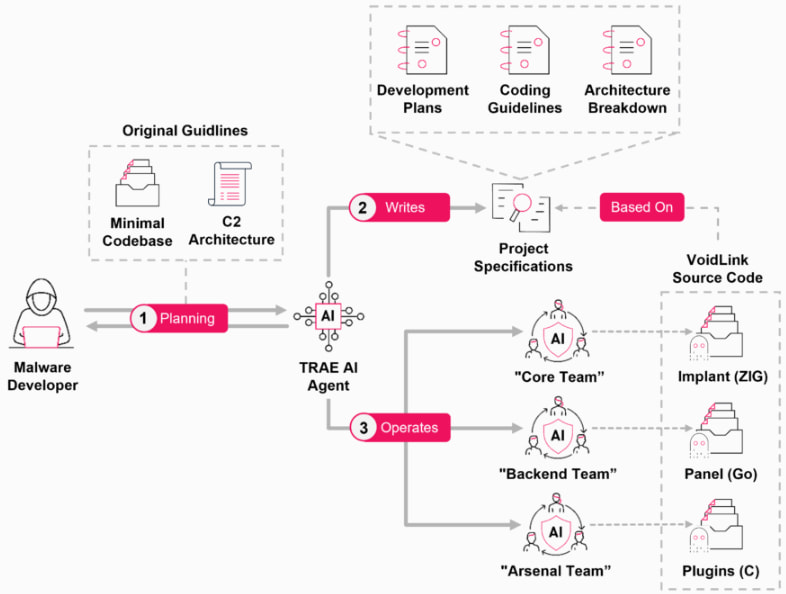

VoidLink’s architecture reflects a sophisticated approach to development, following a method called Spec Driven Development (SDD). Rather than directly tasking the AI with producing malware, the developer, believed to be a single individual, first directed the AI to generate a complete development roadmap. This included multi-team sprint plans, modular design documentation, risk assessments, and deployment guides. These structured plans, written in Chinese and organized in Markdown files, were then used as blueprints to guide AI agents through the implementation process.

The malware’s development likely began in late November 2025 using TRAE SOLO, a specialized AI assistant built into the TRAE integrated development environment (IDE). Leaked documents reveal that the initial AI prompts were carefully crafted to avoid triggering model safety constraints, steering the assistant toward high-level planning instead of direct malicious code generation. This allowed the developer to construct VoidLink in stages, with the AI handling nearly all coding tasks while the human operator acted as a coordinator and tester.

Check Point’s researchers found that the planning documents divided work across three “virtual” teams: the Core Team (using Zig), the Arsenal Team (C), and the Backend Team (Go). Each team had distinct responsibilities, such as module development, payload design, and backend infrastructure. Although the teams were fictional, the sprint planning and adherence to coding standards created the illusion of a large-scale engineering effort. Code analysis showed a nearly perfect match between the specifications and the resulting source code, which exceeded 88,000 lines by the end of the first week.

In its current form, VoidLink includes modules for cloud reconnaissance, lateral movement in containerized environments, and stealth mechanisms using Linux kernel-level manipulation. Command-and-control infrastructure has already been established, and compiled samples have surfaced on platforms like VirusTotal, indicating real-world deployment or testing.
