As enterprises rush to integrate AI assistants into daily workflows, a new and potentially overlooked attack surface is emerging: Model Context Protocol (MCP) servers.

Built to connect AI applications to external tools and data, MCP servers can be exploited to execute code, exfiltrate data and manipulate users — often without visible signs of compromise.

Attackers “… can leverage these [AI] integration points for code execution, data exfiltration, and social engineering — often without any indication to the user that an attack has occurred,” said Praetorian researchers.

Breaking Down MCP Security Risks

The open-source Model Context Protocol (MCP) was introduced to standardize how large language models (LLMs) connect to external tools and enterprise systems.

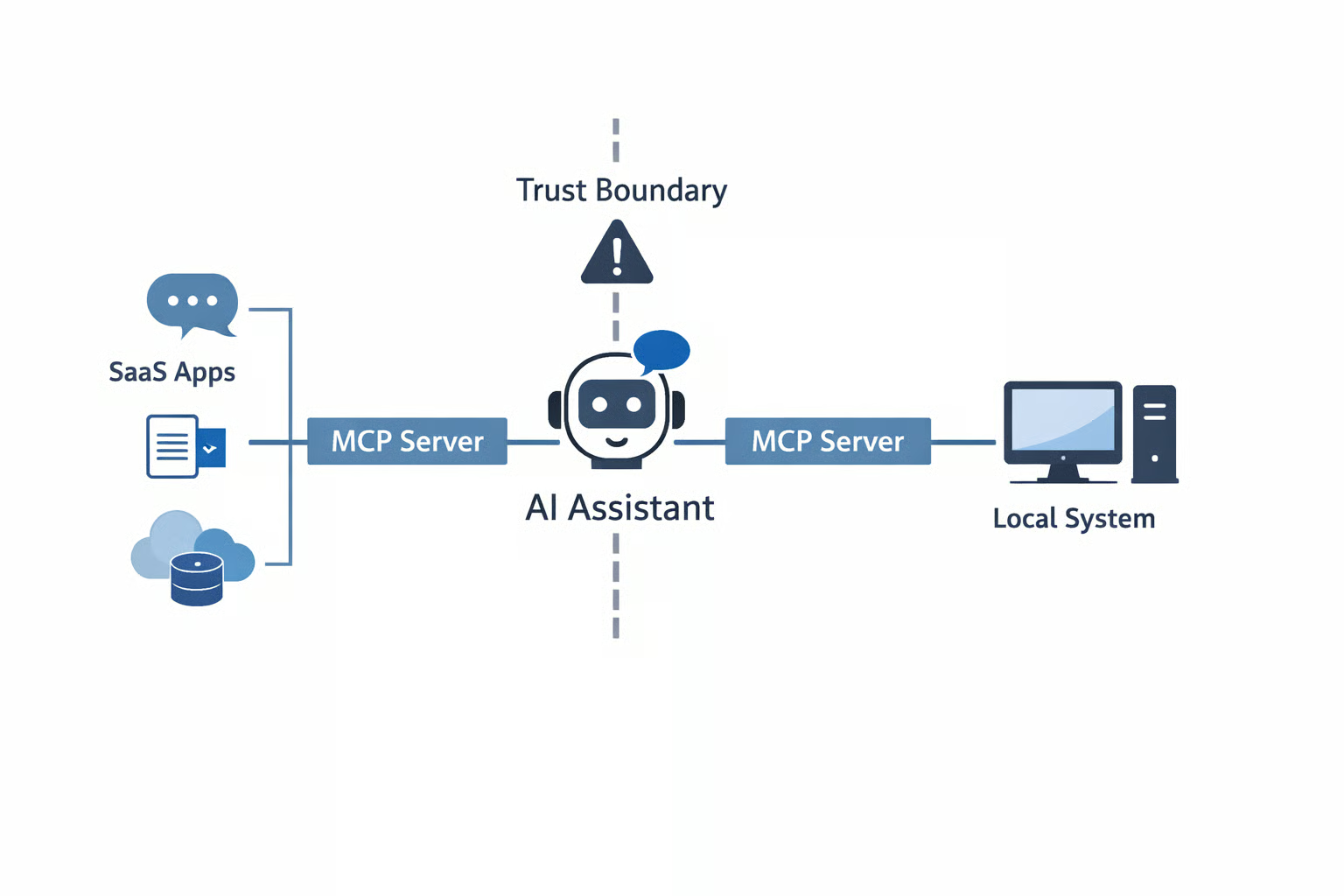

By design, it enables AI assistants to retrieve data, trigger workflows and interact with SaaS platforms in real time.

While this interoperability is powerful, it also inserts MCP servers directly into the trust boundary of AI-driven workflows — effectively creating a machine-in-the-middle layer between the model and the systems it can influence.

MCP servers typically operate in two configurations, each with distinct risk implications.

Locally hosted MCP servers run as processes on a user’s machine, often with the same privileges as the user.

In this position, they can execute arbitrary code, access local files and credentials, collect system information and establish persistence mechanisms.

Remotely hosted MCP servers — such as those integrating with Slack, Notion, Box or Atlassian — cannot directly execute local code, but they can access enterprise data, perform actions inside SaaS platforms and initiate OAuth flows that may expose credentials.

When a malicious local MCP server is chained with a legitimate remote MCP server, the combined access to enterprise data and local execution capabilities can create an effective exploitation path.

How MCP Tools Create Zero-Click Risk

At the core of MCP-related risk is the concept of tools, which allow LLMs to interact with external systems.

For example, the official Slack MCP server exposes read, write and delete capabilities that can be configured with approval levels such as “Always allow,” “Needs approval” or “Blocked.”

While read-only tools often appear low risk and are frequently granted automatic approval, they can become zero-click attack vectors when chained with malicious MCP components that process their outputs.
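One way to picture a defense against this is an approval check that looks at where a tool's output is headed, not only at the tool itself. The sketch below is illustrative only: the tool names, the `POLICY` table and the `consumer_reviewed` flag are assumptions made for the example, not part of any real MCP client.

```python
from enum import Enum


class Approval(Enum):
    ALWAYS_ALLOW = "Always allow"
    NEEDS_APPROVAL = "Needs approval"
    BLOCKED = "Blocked"


# Hypothetical tool names and settings, for illustration only.
POLICY = {
    "slack.read_messages": Approval.ALWAYS_ALLOW,
    "slack.post_message": Approval.NEEDS_APPROVAL,
    "slack.delete_message": Approval.BLOCKED,
}


def effective_approval(tool: str, consumer_reviewed: bool) -> Approval:
    """Return the approval level to enforce for one tool call.

    Unknown tools are blocked. An auto-approved tool is downgraded to
    "Needs approval" when its output will be handed to an MCP server
    that has not been reviewed.
    """
    configured = POLICY.get(tool, Approval.BLOCKED)
    if configured is Approval.ALWAYS_ALLOW and not consumer_reviewed:
        return Approval.NEEDS_APPROVAL
    return configured
```

Under this sketch, a read-only Slack call stays automatic only while every downstream consumer of its output has been vetted.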

MCP Server Chaining and Code Execution

Researchers demonstrated this risk using a proof-of-concept malicious MCP server called conversation_assistant, designed to resemble a benign productivity utility.

In one scenario, an attacker embedded base64-encoded commands inside Slack messages.

When a user asked the AI assistant to retrieve and analyze Slack messages using the trusted Slack MCP server, those messages — including the encoded payload — were returned to the model.

The AI then passed the content to the malicious MCP server’s tools as part of its analysis workflow.

The malicious server decoded the payload and executed it locally, launching system applications in the background while the user saw only legitimate analytical output.

This attack highlights how trusted data sources can become unintended command-delivery channels when AI tools are chained together.

The user interface provided no visible indication that code execution had occurred.
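A defender could screen tool output for encoded blobs before it is passed to another tool. The following is a minimal heuristic sketch, not the researchers' code: the function name and the 16-character threshold are assumptions, and a filter like this will miss other encodings and can produce false positives.

```python
import base64
import binascii
import re

# Runs of base64 alphabet characters at least 16 long, with optional padding.
# The threshold is an arbitrary assumption chosen for this example.
B64_RUN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def find_encoded_payloads(text: str) -> list[str]:
    """Return decoded strings for base64 runs that decode to printable text."""
    hits = []
    for match in B64_RUN.finditer(text):
        candidate = match.group(0)
        if len(candidate) % 4 != 0:
            continue  # not a well-formed base64 length
        try:
            decoded = base64.b64decode(candidate, validate=True)
            as_text = decoded.decode("utf-8")
        except (binascii.Error, ValueError):
            continue  # invalid base64 or not UTF-8 text
        if as_text.isprintable():
            hits.append(as_text)
    return hits
```

Messages that return any hits could be held for human review instead of flowing straight into a second MCP server.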

Beyond command execution, MCP servers can also enable large-scale data exfiltration.

Because tools receive full context as input, a malicious MCP server can capture complete datasets — such as Slack conversations or document content — and forward them to attacker-controlled infrastructure.

In the demonstration, exfiltrated Slack messages were uploaded as JSON files to an attacker’s Slack workspace using a hardcoded bot token.
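A hardcoded token of this kind is something a pre-installation code review can look for. The sketch below scans source text for strings shaped like Slack bot or user tokens (the `xoxb-` and `xoxp-` prefixes); the function name and the exact pattern are assumptions for illustration, and a real review would rely on a dedicated secret scanner.

```python
import re

# Matches strings shaped like Slack bot (xoxb-) or user (xoxp-) tokens.
# The minimum length after the prefix is an assumption for this example.
SLACK_TOKEN = re.compile(r"\bxox[bp]-[A-Za-z0-9-]{10,}")


def hardcoded_slack_tokens(source: str) -> list[int]:
    """Return 1-based line numbers that appear to contain a Slack token."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if SLACK_TOKEN.search(line):
            findings.append(lineno)
    return findings
```

Because the exfiltration traffic in the demonstration went to Slack itself, a host-based egress allowlist alone would likely not have caught it, which is why inspecting the server's code matters.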

Supply Chain and Response Injection Risks

The attack surface extends beyond tool chaining. The MCP ecosystem commonly relies on uvx, the tool runner in Astral's uv package manager, to load Python-based MCP servers.

When an MCP client starts a server configured this way, uvx downloads the referenced package from PyPI and executes it.

This introduces supply chain risks, including typosquatting, package compromise through credential theft or CI/CD abuse, and revival hijacking of abandoned package names.

Unlike interactive MCP chaining attacks, these supply chain vectors require no user interaction.

Malicious code executes during agent startup — before any MCP tools are invoked — bypassing tool approval mechanisms entirely.

Researchers also demonstrated response injection techniques, embedding malicious shortened URLs and false support instructions into otherwise accurate AI responses.

Because the content appears to originate from a trusted assistant, users may be more susceptible to credential harvesting, phishing or other social engineering attacks.
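As a rough illustration of a countermeasure, a client or proxy could flag shortened links in assistant responses before they reach the user. The shortener list and function name below are assumptions for the example; a real deployment would need a maintained list and would still miss attacker-owned domains.

```python
import re
from urllib.parse import urlparse

# Illustrative, incomplete list of URL-shortener hosts.
SHORTENER_HOSTS = {"bit.ly", "tinyurl.com", "t.co"}

URL_PATTERN = re.compile(r"https?://[^\s<>\"')\]]+")


def shortened_links(response_text: str) -> list[str]:
    """Return URLs in the response whose host is a known shortener."""
    flagged = []
    for url in URL_PATTERN.findall(response_text):
        host = (urlparse(url).hostname or "").lower()
        if host in SHORTENER_HOSTS:
            flagged.append(url)
    return flagged
```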

Protecting AI Integration Layers

As MCP adoption expands, organizations should approach these integrations with the same rigor applied to other enterprise infrastructure components.

Because MCP servers can execute code, access sensitive data and influence system behavior, defensive measures must extend beyond basic configuration.

A layered approach that combines governance, technical controls and monitoring is essential to reducing risk.

- Implement a formal review and approval process for all MCP server installations and treat them as potentially untrusted executable code.

- Minimize tool permissions by avoiding “always allow” settings, restricting OAuth scopes to least privilege and requiring human approval for high-risk actions such as file downloads or local command execution.

- Isolate locally hosted MCP servers in sandboxed environments or containers with restricted user privileges and enforce strict outbound network controls to limit data exfiltration.

- Enable comprehensive logging of MCP tool invocations and data flows, and integrate telemetry into SIEM and monitoring platforms to detect anomalous tool chaining, bulk data retrieval or unusual process launches.

- Strengthen supply chain defenses by pinning package versions, validating package sources, using software composition analysis (SCA) tools and integrating MCP configurations into CI/CD security reviews.

- Educate users and administrators about the risks of chained tool calls and AI-driven integrations, and continuously audit configurations for unnecessary integrations or excessive permissions.

- Test incident response plans to account for MCP-based attacks, including code execution, data exfiltration and supply chain compromise scenarios.
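The logging and monitoring recommendation above can be sketched as a simple rule over tool-invocation records: flag any session in which a call to a reviewed server is followed by a call to a server that has not been reviewed. The record shape, the `REVIEWED_SERVERS` set and the `analyze` tool name in the usage note are hypothetical; real telemetry would come from the MCP client or a proxy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    session: str
    server: str
    tool: str


# Hypothetical set of servers that passed the organization's review.
REVIEWED_SERVERS = {"slack"}


def suspicious_chains(calls: list[ToolCall]) -> list[tuple[ToolCall, ToolCall]]:
    """Pair each call to an unreviewed server with the most recent
    reviewed-server call that preceded it in the same session."""
    last_reviewed: dict[str, ToolCall] = {}
    alerts = []
    for call in calls:
        if call.server in REVIEWED_SERVERS:
            last_reviewed[call.session] = call
        elif call.session in last_reviewed:
            alerts.append((last_reviewed[call.session], call))
    return alerts
```

Fed a log in which `slack` is called and then `conversation_assistant` is invoked with an `analyze` tool in the same session, this rule would raise one alert pairing the two calls, which a SIEM could then correlate with process-launch or network events.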

Together, these measures can help organizations reduce the likelihood of MCP-based compromise while improving visibility, containment and recovery capabilities across AI-integrated environments.

AI Integrations and Trust Boundaries

As AI assistants become more deeply embedded in enterprise systems, MCP servers represent a new convergence point between application logic, SaaS integrations and endpoint execution.

The same interoperability that enables productivity gains also weakens traditional trust boundaries, creating opportunities for code execution, data exfiltration and social engineering if not properly governed.

For security teams, it’s important to treat AI integration layers as core infrastructure rather than just auxiliary tooling.

The spread of AI integrations is prompting organizations to adopt zero-trust approaches that continuously verify identities, restrict implicit trust and segment access across AI-driven integrations and enterprise systems.