Generative AI and multi-agent autonomous systems are transforming the enterprise IT stack, promising breakthroughs in efficiency, customer experience, and innovation. Yet, without disciplined, secure-by-design strategies embedded from the start, organizations risk data breaches, unauthorized agent behavior, compliance failures, and erosion of trust.

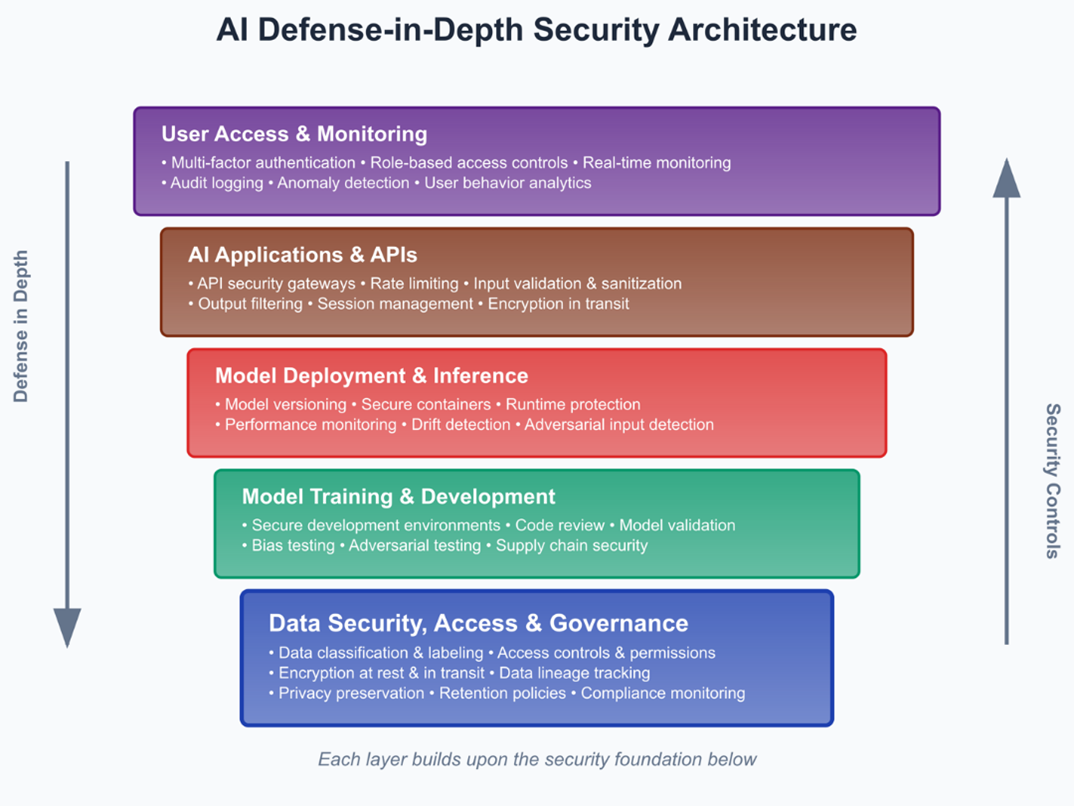

The good news? Defense-in-depth, zero-trust principles, and strong governance still apply, and they can be extended to protect even the most complex AI architectures. By building on proven foundations and adapting them for AI’s unique challenges, enterprises can not only mitigate risk but also unlock competitive advantage.

From Familiar Foundations to AI-Specific Safeguards

Enterprise AI systems inherit much from traditional security domains—data integrity, identity management, and network segmentation—but introduce new considerations such as adversarial attacks, data poisoning, and model theft. Extending established controls to meet these challenges is both possible and necessary. Adversarial robustness, for example, requires organizations to implement input validation, conduct continuous model testing, and run adversarial scenario simulations to identify vulnerabilities before they can be exploited. Similarly, maintaining data integrity demands applying mature governance and lineage tracking practices to training data, while adding AI-specific monitoring to detect poisoning attempts.
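Lineage tracking for training data can be made concrete with a small Python sketch. It assumes the training data lives as files in a single directory. When the data is approved, it records a SHA-256 digest for each file in a manifest. Before the next training run, it reports any file that was modified, removed, or added. The function names and the directory layout are hypothetical. The check only catches tampering that happens after the snapshot was taken. It cannot detect data that was already poisoned when the manifest was built, so it complements poisoning-specific monitoring rather than replacing it.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash a file in chunks so large training shards need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    """Record one digest per file, keyed by its path relative to data_dir."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """List files that were modified, removed, or added since the manifest."""
    current = build_manifest(data_dir)
    problems = []
    for name, digest in manifest.items():
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != digest:
            problems.append(f"modified: {name}")
    for name in current.keys() - manifest.keys():
        problems.append(f"unexpected: {name}")
    return sorted(problems)


def demo() -> List[str]:
    """Snapshot a toy dataset, tamper with it, and report the drift."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.csv").write_text("text,label\nhello,0\n")
        (root / "eval.csv").write_text("text,label\nbye,1\n")
        manifest = build_manifest(root)
        (root / "train.csv").write_text("text,label\nhello,1\n")  # flipped label
        (root / "extra.csv").write_text("text,label\ninjected,1\n")  # new file
        return verify_manifest(root, manifest)


if __name__ == "__main__":
    print(demo())
```

In practice, the manifest itself would need to be stored somewhere the training pipeline cannot write to. Otherwise, an attacker who can alter the data could also regenerate the digests.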

The protection of intellectual property is another critical area. AI models should be treated as high-value business assets, with measures such as encryption of model parameters, strict access controls, and the safeguarding of training methodologies. These approaches are further strengthened when paired with technical safeguards like confidential computing for regulated data, signed tokens for secure tool calls, and AI-specific monitoring tools that can detect unusual model behavior.
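Signed tokens for tool calls can be illustrated with the Python standard library alone. The sketch below assumes a secret key shared between the component that issues tokens and the tool that checks them. It also uses a hypothetical claim layout (agent, tool, expiry) signed with HMAC-SHA256. A production system would more likely use an established format such as JSON Web Tokens through a vetted library, often with asymmetric keys. The checks stay the same in either case: authenticity, expiry, and scope.

```python
import base64
import binascii
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64encode(data: bytes) -> str:
    """URL-safe base64 without padding, so tokens fit in headers and URLs."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64decode(text: str) -> bytes:
    """Inverse of _b64encode: restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_tool_call(key: bytes, agent_id: str, tool: str,
                   ttl_s: int = 60, now: Optional[float] = None) -> str:
    """Issue a short-lived token that lets one agent call one tool."""
    issued = time.time() if now is None else now
    claims = {"agent": agent_id, "tool": tool, "exp": int(issued) + ttl_s}
    payload = _b64encode(json.dumps(claims, sort_keys=True).encode("utf-8"))
    sig = hmac.new(key, payload.encode("ascii"), hashlib.sha256).digest()
    return f"{payload}.{_b64encode(sig)}"


def verify_tool_call(key: bytes, token: str, tool: str,
                     now: Optional[float] = None) -> dict:
    """Return the claims if the token is authentic, unexpired, and scoped to tool."""
    try:
        payload, sig_text = token.split(".")
        payload_bytes = payload.encode("ascii")
        sig = _b64decode(sig_text)
    except (ValueError, binascii.Error):
        raise PermissionError("malformed token") from None
    expected = hmac.new(key, payload_bytes, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many signature bytes matched.
    if not hmac.compare_digest(expected, sig):
        raise PermissionError("bad signature")
    claims = json.loads(_b64decode(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise PermissionError("token expired")
    if claims["tool"] != tool:
        raise PermissionError("token not valid for this tool")
    return claims
```

The `now` parameter exists only so the expiry logic can be exercised deterministically. In normal use it defaults to the current time. Binding each token to a single tool and a short lifetime limits the damage if a token leaks.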

Secure-by-Design in the AI Stack

Security for AI must begin at the architecture level, integrating authentication, least-privilege access, and threat mitigation into model design, data handling, and agent orchestration from day one. The Model Context Protocol (MCP), an open standard now supported by providers including OpenAI and Google, gives agents a common, context-aware way to interact with tools and data sources. That convenience also widens the attack surface: MCP deployments face risks such as prompt injection, tool spoofing, and credential misuse. Enterprises can address these risks by connecting only to trusted MCP servers, requiring cryptographically signed tokens, auditing context metadata, and monitoring tool access for irregularities.
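One way to combine a trusted-server allowlist with tool-access auditing is a small gateway that every agent tool call must pass through. The sketch below is hypothetical and is not part of any MCP SDK. `ToolGateway`, the server URLs, and the per-agent call-rate limit are all assumptions. The rate limit serves as a simple stand-in for detecting "irregular" access. Every decision, allowed or denied, is appended to an audit trail that could be forwarded to a SIEM.

```python
import logging
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Set

log = logging.getLogger("tool_gateway")


@dataclass
class ToolGateway:
    """Hypothetical choke point that every agent tool call must pass through."""
    trusted_servers: Set[str]          # allowlist of vetted MCP server endpoints
    max_calls_per_window: int = 20     # crude proxy for "irregular" access
    window_s: float = 60.0
    audit: List[dict] = field(default_factory=list)
    _recent: Dict[str, Deque[float]] = field(
        default_factory=lambda: defaultdict(deque), init=False, repr=False)

    def authorize(self, agent_id: str, server: str, tool: str, now: float) -> bool:
        record = {"agent": agent_id, "server": server, "tool": tool, "ts": now}
        if server not in self.trusted_servers:
            record["decision"] = "deny:untrusted_server"
        else:
            calls = self._recent[agent_id]
            # Drop timestamps that have aged out of the sliding window.
            while calls and now - calls[0] > self.window_s:
                calls.popleft()
            if len(calls) >= self.max_calls_per_window:
                record["decision"] = "deny:rate_anomaly"
            else:
                calls.append(now)
                record["decision"] = "allow"
        self.audit.append(record)  # every decision is recorded, allowed or not
        log.info("tool access: %s", record)
        return record["decision"] == "allow"
```

A real deployment would look at richer signals than call rate, such as unusual tool combinations or access outside business hours. Even so, a single choke point ensures every signal is captured in one place.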

Multi-agent systems also present systemic risks, such as miscoordination between agents and “tool squatting,” where malicious tools impersonate legitimate ones. Zero-trust registries, which admit only explicitly vetted tools, and unified orchestration hubs can help maintain governance and observability across agent workflows, supporting both security and operational efficiency.
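A zero-trust registry can be sketched as a table of explicitly approved tools, each pinned to a fingerprint of its manifest. Under this approach, a tool whose name matches but whose manifest has changed is flagged as tampered. An unapproved name that closely resembles an approved one is flagged as a likely squat. `ToolRegistry`, the manifest fields, and the 0.85 similarity threshold are illustrative choices. The `difflib` string similarity is a simple stand-in for the lookalike detection a real registry would need.

```python
import difflib
import hashlib
import json
from typing import Dict


def fingerprint(manifest: dict) -> str:
    """Hash a canonical JSON form so key order cannot change the result."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ToolRegistry:
    def __init__(self, similarity_threshold: float = 0.85) -> None:
        self._pinned: Dict[str, str] = {}
        self.similarity_threshold = similarity_threshold

    def approve(self, name: str, manifest: dict) -> None:
        """Pin a tool after out-of-band review; nothing is trusted implicitly."""
        self._pinned[name] = fingerprint(manifest)

    def check(self, name: str, manifest: dict) -> str:
        """Classify a tool an agent is about to use."""
        pinned = self._pinned.get(name)
        if pinned is not None:
            # Same name, different manifest: the tool changed after approval.
            return "trusted" if pinned == fingerprint(manifest) else "tampered"
        for known in self._pinned:
            ratio = difflib.SequenceMatcher(None, name.lower(), known.lower()).ratio()
            if ratio >= self.similarity_threshold:
                return f"suspected_squat_of:{known}"
        return "unknown"
```

For example, a tool named `send_emai1` (ending in the digit one) would be reported as a suspected squat of an approved `send_email`. Anything that is neither approved nor a lookalike comes back as `unknown`, and under zero trust it should be denied until reviewed.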

Human-Agent Hybrid Teams

Unchecked AI autonomy increases risk, making it vital to blend human oversight with agent execution. The most resilient systems are designed so that humans retain control over critical tasks such as final approvals, anomaly reviews, and escalations. This ensures that while agents can operate at scale, decision-making for high-impact scenarios remains in human hands.

In Security Operations Centers, for instance, agents can handle Level 1 and Level 2 work, such as alert enrichment and triage, freeing senior analysts to focus on strategic threat hunting. Similar approaches in sales, procurement, and customer service can speed up operations while preserving accountability and transparency. By building in kill-switch mechanisms, audits at approval gates, and workflows in which agents flag uncertain outcomes for human review, organizations can reduce risk while strengthening oversight.
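This split between agent and human work can be expressed in a few lines. Agents act alone on routine work, humans approve high-impact or uncertain actions, and a kill switch stops everything. The Python sketch below assumes each proposed action carries a two-level impact label and a self-reported confidence score. `OversightGate`, the 0.8 threshold, and the field names are hypothetical.

```python
import threading
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentAction:
    agent_id: str
    description: str
    impact: str        # hypothetical two-level scale: "low" or "high"
    confidence: float  # agent's self-reported confidence in [0, 1]


@dataclass
class OversightGate:
    min_confidence: float = 0.8
    review_queue: List[AgentAction] = field(default_factory=list)
    # An Event is thread-safe, so any operator thread can trip the switch.
    _halted: threading.Event = field(
        default_factory=threading.Event, init=False, repr=False)

    def kill_switch(self) -> None:
        """Stop all autonomous execution until a human explicitly resets it."""
        self._halted.set()

    def reset(self) -> None:
        self._halted.clear()

    def route(self, action: AgentAction) -> str:
        """Decide whether an action runs, waits for a human, or is blocked."""
        if self._halted.is_set():
            return "blocked"
        # High impact always goes to a human, however confident the agent is.
        if action.impact == "high" or action.confidence < self.min_confidence:
            self.review_queue.append(action)
            return "human_review"
        return "auto_execute"
```

The gate checks impact before confidence, so a high-impact step such as isolating a production host waits for an analyst even when the agent is certain. Routine enrichment still runs at machine speed.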

Aligning Security with Measurable Impact

Technical safeguards deliver their full value only when they are tied directly to business outcomes. Successful AI programs identify ROI-driven use cases, such as automating pricing governance, accelerating threat detection, or streamlining sales processes, and establish metrics to measure success. These might include the percentage reduction in manual processes, improvements in detection and response times, or measurable revenue and cost-efficiency gains.
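Most of these metrics reduce to before-and-after arithmetic. The sketch below computes the percentage reduction from a baseline for each metric. The metric names and sample figures are invented for illustration and are not results from any deployment.

```python
from statistics import mean
from typing import Dict, Sequence


def pct_reduction(before: float, after: float) -> float:
    """Percent reduction against a baseline; a negative value means it got worse."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (before - after) / before


def scorecard(baseline: Dict[str, Sequence[float]],
              pilot: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Compare the mean of each metric before and after an AI deployment."""
    return {
        name: round(pct_reduction(mean(baseline[name]), mean(pilot[name])), 1)
        for name in baseline
    }


if __name__ == "__main__":
    # Illustrative numbers only: minutes to detect, and manual touches per week.
    print(scorecard(
        {"mean_time_to_detect_min": [120, 90, 150], "manual_touches_per_week": [400]},
        {"mean_time_to_detect_min": [45, 30, 60], "manual_touches_per_week": [260]},
    ))
```

With these made-up inputs, mean time to detect falls from 120 to 45 minutes, a 62.5% reduction. Manual touches fall from 400 to 260 per week, a 35.0% reduction. Both metrics are "lower is better." For revenue or other "higher is better" measures, the sign of the result flips.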

Maintaining alignment between technical performance and business value requires ongoing cross-functional governance. Security, machine learning operations, compliance, and business units must collaborate continuously to ensure that efficiency gains persist, systems remain compliant, and AI deployments continue to meet organizational objectives.

The Time to Start is Now

Enterprises can take the first step by piloting secure multi-agent systems in controlled environments, applying threat modeling from the outset, and developing reusable, industry-specific agent libraries. Establishing secure-by-design principles early in the AI lifecycle, building cross-functional governance structures, and incorporating human oversight into all critical workflows are key to long-term success. Partnering with specialized firms can accelerate adoption while ensuring operational resilience, regulatory compliance, and stakeholder trust.

The organizations that embed AI security into their DNA today will be the ones leading tomorrow, deploying advanced AI capabilities with confidence, adapting to evolving regulations, and building a lasting competitive advantage.

About the Author

Alison Andrews is the Managing Director at MorganFranklin Cyber, where she leads the firm’s Artificial Intelligence practice. With over 20 years of experience in AI, cybersecurity, and cloud technology, Alison previously served as Global Director at Google Cloud, driving innovative solutions using generative AI. She also founded Vigilant, a security services provider acquired by Deloitte. At MorganFranklin, Alison focuses on developing secure, enterprise-ready AI systems that deliver measurable value and drive transformation for clients.
