
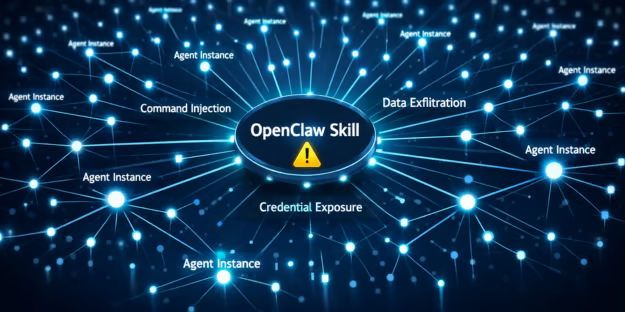

As AI agents become more widely adopted, new research is highlighting security gaps within their supporting ecosystems.

A large-scale audit of the OpenClaw skill registry by ClawSecure found that 41.7% of widely used skills contain substantive vulnerabilities, including command injection and credential exposure.

“We audited 2,890+ of the most popular OpenClaw skills and found that over 41% have substantive security vulnerabilities,” said J.D. Salbego, founder of ClawSecure, in an email to eSecurityPlanet.

He added, “The core problem is that a single scan at install time isn’t enough. These agents run locally with full system access and the code can change at any time.”

Inside the OpenClaw Security Audit

OpenClaw skills function as modular extensions that expand what AI agents can do.

They allow agents to access local files, interact with external services, execute shell commands, process user inputs, and automate multi-step workflows.

In practice, these skills operate much like plugins in a traditional software ecosystem, but with far deeper access to data and system resources.

Because they serve as reusable building blocks for more than 2.2 million deployed agent instances, a vulnerability in a single widely adopted skill can propagate across thousands of downstream implementations.

This creates a supply chain-style risk embedded directly into AI automation workflows.

Key Findings from the OpenClaw Security Audit

The findings come from the largest public security analysis of the OpenClaw ecosystem to date.

ClawSecure audited more than 2,890 skills drawn from the community-curated awesome-openclaw-skills list and the official openclaw/skills repository.

According to the report, 30.6% of skills (883 total) contain at least one high or critical severity vulnerability. Across those skills, the audit logged 1,587 critical and 1,205 high-severity findings.

The audit identified several vulnerability classes associated with agent-based architectures.

These include command injection, data exfiltration, credential harvesting, and prompt injection.

Additionally, 18.7% of audited skills exhibited indicators associated with the ClawHavoc malware campaign, including memory harvesting behaviors and command-and-control callbacks.

Governance Gaps and Missing Permissions Controls

Beyond direct vulnerabilities, the analysis also revealed systemic governance gaps.

An overwhelming 99.3% of skills shipped without a config.json permissions manifest.

In traditional software ecosystems, permission declarations provide transparency into what system access an application requires before installation.

Without a standardized manifest, users may unknowingly grant broad access to files, clipboard data, shell execution, or outbound network connections, reducing their ability to make informed trust decisions.
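To make the idea of a permissions manifest concrete, here is a minimal Python sketch of a pre-install check. The report names the file config.json, but it does not describe its schema, so the `permissions` key and the four permission names below are assumptions made for illustration only.

```python
import json
from pathlib import Path

# Hypothetical permission names; the real OpenClaw schema may differ.
KNOWN_PERMISSIONS = {"files", "clipboard", "shell", "network"}


def read_declared_permissions(skill_dir):
    """Return the set of declared permissions, or None if no usable manifest exists."""
    manifest = Path(skill_dir) / "config.json"
    if not manifest.is_file():
        return None
    try:
        data = json.loads(manifest.read_text(encoding="utf-8"))
    except (json.JSONDecodeError, UnicodeDecodeError):
        return None
    if not isinstance(data, dict):
        return None
    perms = data.get("permissions", [])  # the "permissions" key is an assumption
    if not isinstance(perms, list):
        return None
    return {p for p in perms if isinstance(p, str)}


def review_skill(skill_dir):
    """Summarize what a user could learn from the manifest before installing."""
    declared = read_declared_permissions(skill_dir)
    if declared is None:
        return "no manifest: access requirements unknown"
    unknown = declared - KNOWN_PERMISSIONS
    if unknown:
        return "unrecognized permissions: " + ", ".join(sorted(unknown))
    return "declares: " + (", ".join(sorted(declared)) or "nothing")
```

A skill without a manifest falls into the first branch, which is the situation the audit reports for 99.3% of skills: the user has nothing to base a trust decision on.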

Why Generic Scanners Miss Agent-Specific Threats

ClawSecure further reported that 40.6% of all identified vulnerabilities were detectable only through ecosystem-specific analysis.

Generic static scanners failed to identify many of these issues.

This detection gap reflects a broader structural challenge: AI agent ecosystems combine data access, untrusted content processing, tool execution, and persistent memory, a combination that traditional scanners were not designed to handle.

The “Sleeper Agent” Code Drift Problem

Compounding these risks is what ClawSecure describes as the “Sleeper Agent” problem.

Within 24 hours of enabling continuous integrity monitoring, the company detected 35 skills with modified code.

Overall, 22.9% of tracked skills recorded at least one hash change after initial scanning.

In practical terms, a skill that appears safe at installation can later receive updates that alter its behavior, potentially introducing new vulnerabilities or malicious functionality, without users being aware.

This highlights the limits of one-time security reviews in dynamic agent ecosystems where code changes are routine.
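The hash-change detection described above can be sketched in a few lines of Python. This is an illustrative approach, not ClawSecure's implementation: the function names, the one-directory-per-skill layout, and the baseline format (a mapping of skill name to digest) are all assumptions.

```python
import hashlib
from pathlib import Path


def fingerprint_skill(skill_dir):
    """Return one SHA-256 digest covering every file's relative path and contents."""
    root = Path(skill_dir)
    digest = hashlib.sha256()
    # Sort so the digest does not depend on filesystem listing order.
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        digest.update(path.relative_to(root).as_posix().encode("utf-8"))
        digest.update(b"\0")
        digest.update(path.read_bytes())
        digest.update(b"\0")
    return digest.hexdigest()


def find_drifted(baseline, skills_root):
    """Return skill names whose current digest differs from the recorded baseline.

    `baseline` is a hypothetical {skill_name: digest} mapping captured at the
    time of the initial scan. A skill that has disappeared also counts as drift.
    """
    drifted = []
    for name, known_digest in sorted(baseline.items()):
        skill_dir = Path(skills_root) / name
        if not skill_dir.is_dir() or fingerprint_skill(skill_dir) != known_digest:
            drifted.append(name)
    return drifted
```

Run on a schedule, a check like this only flags that code changed. Any skill it returns would still need to be re-scanned before it is trusted again.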

Reducing AI Agent Attack Surface

As AI agents become embedded in enterprise workflows, security controls must evolve to match how these systems function.

Traditional application safeguards often fall short for architectures that combine automation, data access, and tool execution.

To manage risk effectively, organizations need proactive governance, continuous monitoring, and rigorous validation tailored to agent-based environments.

- Scan skills before installation using security tools designed specifically for AI agent architectures, not just generic malware scanners.

- Enforce least privilege and just-in-time access by restricting agent permissions to only the data, tools, and system capabilities required for defined workflows.

- Require code signing, publisher verification, and formal change management to control updates and reduce the risk of malicious or unauthorized modifications.

- Implement continuous integrity monitoring to detect code drift and automatically re-verify skills after any changes.

- Isolate agents in sandboxed or containerized environments and restrict outbound network access to limit command-and-control callbacks and data exfiltration.

- Enable comprehensive logging and behavioral monitoring to detect anomalous file access, memory harvesting, unusual prompt activity, or unexpected outbound connections.

- Incorporate AI agent workflows into vulnerability management programs and regularly test incident response plans to ensure teams can detect, investigate, and contain agent-related security events.

Together, these measures help limit the blast radius of compromised skills while building long-term resilience into AI agent deployments.
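As one illustration of the least-privilege recommendation above, the following Python sketch denies by default any capability a workflow has not been explicitly granted. The `WorkflowPolicy` type and the capability strings are hypothetical and are not part of OpenClaw or any ClawSecure tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class WorkflowPolicy:
    """Capabilities a workflow may use (capability names are hypothetical)."""
    name: str
    allowed: frozenset = field(default_factory=frozenset)


def excess_permissions(requested, policy):
    """Return the requested capabilities that the policy does not allow."""
    return sorted(set(requested) - policy.allowed)


def authorize(requested, policy):
    """Deny by default: approve only if every request is on the allowlist."""
    extra = excess_permissions(requested, policy)
    if extra:
        raise PermissionError(
            f"{policy.name}: denied, exceeds policy: {', '.join(extra)}"
        )
    return True


# Example: a reporting workflow that only needs to read files.
reporting = WorkflowPolicy("reporting", frozenset({"files:read"}))
```

With this policy, `authorize(["files:read"], reporting)` succeeds, while a skill that also asks for `"shell"` raises `PermissionError` instead of being silently granted broader access.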

The Supply Chain Risk of AI Agents

The OpenClaw findings highlight an important consideration for the AI ecosystem: as agents gain broader access to enterprise systems, security controls need to evolve alongside them.

Vulnerabilities at the skill layer can propagate across multiple downstream workflows, especially when widely adopted components are reused at scale.

As adoption grows, consistent oversight and resilient controls will be key to balancing automation benefits with responsible security practices.

Organizations that apply software supply chain principles, such as validation, monitoring, and governance, to agent skills will be better equipped to manage these risks.