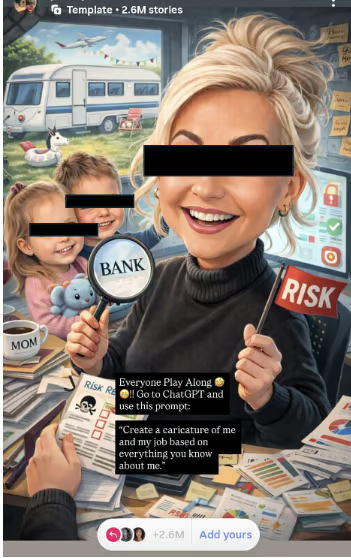

A viral Instagram and LinkedIn trend is turning harmless fun into a potential security headache.

Millions of users are prompting ChatGPT to “create a caricature of me and my job based on everything you know about me,” then posting the results publicly — inadvertently signaling how they use AI at work and what access they might have to sensitive data.

“While many have been discussing the privacy risks of people following the ChatGPT caricature trend, the prompt reveals something else alarming — people are talking to their LLMs about work,” said Josh Davies, principal market strategist at Fortra in an email to eSecurityPlanet.

He added, “If they are not using a sanctioned ChatGPT instance, they may be inputting sensitive work information into a public LLM. Those who publicly share these images may be putting a target on their back for social engineering attempts and malicious actors have millions of entries to select attractive targets from.”

Josh explained, “If an attacker is able to take over the LLM account, potentially using the detailed information included in the image for a targeted social engineering attack, then they could view the prompt history and search for sensitive information shared with the LLM.”

He also added, “This trend doesn’t just highlight a privacy risk, but also the risk of shadow AI and data leakage in prompts – where organizations lose control of their sensitive data via employees irresponsibly using AI.”

How AI Trends Expose Enterprise Data

The OWASP LLM Top Ten lists Sensitive Information Disclosure (LLM2025:02) as one of the top risks associated with LLMs.

This risk extends beyond accidental oversharing — it encompasses any scenario in which sensitive data entered into an LLM becomes accessible to unauthorized parties.

Against that backdrop, the AI caricature trend is more than harmless social media entertainment.

It serves as a visible indicator of a broader shadow AI challenge: employees using public AI platforms without formal governance, oversight, or technical controls.

It also demonstrates how easily threat actors can identify individuals who are likely integrating LLMs into their daily workflows.

How the AI Caricature Trend Expands the Attack Surface

Many of the posted caricatures clearly depict the user’s profession — banker, engineer, HR manager, developer, healthcare provider.

While job titles themselves are often publicly available through professional networking sites, participation in this trend adds a new layer of context.

By generating and sharing these images, users effectively confirm that they rely on a specific public LLM platform for work-related activities. That confirmation is valuable intelligence for an adversary conducting reconnaissance.

The scale amplifies the risk. At the time of writing, millions of images have been shared, many from public accounts, creating a searchable dataset of professionals who likely use public AI systems.

For attackers, this lowers the barrier to building targeted phishing lists focused on high-value roles with probable access to sensitive information.

Security teams evaluating this trend should view it through the lens of shadow AI and AI governance.

Unapproved or unmanaged AI usage expands the organization’s attack surface, often without visibility from security operations teams.

The caricature itself is not the vulnerability; rather, it signals that potentially sensitive prompts may have been submitted to an external AI service outside enterprise control.

The Two Primary Threat Paths

From a threat modeling perspective, two primary attack paths emerge: account takeover and sensitive data extraction through manipulation.

The more immediate risk is LLM account compromise.

A public Instagram post provides a username, profile information, and often clues about the individual’s employer and responsibilities.

Using basic open-source intelligence techniques, attackers can frequently correlate this data with an email address.

If that same email address is used to register for the LLM platform, targeted phishing or credential harvesting attacks become significantly more effective.

Once an attacker gains access to the LLM account, the impact can escalate quickly.

Prompt histories may contain customer data, internal communications, financial projections, proprietary source code, or strategic planning discussions.

Because LLM interfaces allow users to search, summarize, and reference past conversations, an attacker with authenticated access can efficiently identify and extract valuable information.

Although providers implement safeguards to prevent cross-user data exposure, prompt histories remain fully accessible to the legitimate — or compromised — account holder.

Prompt Injection and Model Manipulation

The second path involves prompt injection attacks.

Security researchers have demonstrated multiple ways to manipulate model behavior, including persona-based jailbreaks, instruction overrides like “ignore previous instructions,” and payload-splitting techniques that reconstruct malicious prompts within the model’s context window.

In both cases, the underlying issue is not the caricature trend itself.

The real risk lies in what it implies: that sensitive business information may have been entered into unmanaged, public AI environments.

The social media post simply makes that risk more visible — to defenders and adversaries alike.

Practical Steps to Reduce Shadow AI Risk

As generative AI becomes more integrated into everyday workflows, organizations should take a structured and proactive approach to managing associated risks.

- Establish and regularly reinforce a comprehensive AI governance policy that clearly defines acceptable use, data handling requirements, and employee responsibilities.

- Provide a secure, enterprise-managed AI alternative while restricting or monitoring unsanctioned AI applications to reduce shadow AI exposure.

- Deploy data loss prevention and data classification controls to detect, block, or warn against the submission of sensitive information into AI platforms.

- Enforce strong identity and access management practices, including multi-factor authentication, role-based access controls, and monitoring for credential exposure.

- Segment and monitor AI traffic through secure web gateways, browser isolation, or network controls to reduce the risk of data exfiltration and lateral movement.

- Integrate AI-specific scenarios into security awareness programs and regularly test incident response plans through tabletop exercises involving AI-related compromise.

- Continuously monitor for signs of AI account compromise, prompt misuse, or leaked credentials across the open web and dark web.

Effective AI risk management requires more than a single policy or tool; it involves coordinated governance, technical controls, user education, and ongoing monitoring.

Hidden Security Risks of Viral AI Trends

The AI caricature trend may appear lighthearted, but it underscores a larger reality: generative AI is now embedded in professional workflows, often without corresponding security guardrails.

What users share publicly can provide valuable reconnaissance for attackers and expose weaknesses in governance, identity security, and data protection practices.

The issue is not the meme itself, but the broader signal it sends about how and where sensitive information may be handled.

Organizations that pair clear AI policies with technical controls, user awareness, and continuous monitoring will be better positioned to support innovation while maintaining control over their data.

To strengthen these controls, organizations are adopting zero-trust solutions to continuously verify and tightly manage access to AI tools and sensitive data.