
An uncensored AI assistant operating on the Dark Web is rapidly gaining traction among cybercriminals — and security researchers warn it could significantly accelerate illegal activity heading into 2026.

Known as DIG AI, the tool lets threat actors generate malware, scams, and other illicit content with minimal technical skill, showing how AI is being weaponized beyond the reach of traditional safeguards.

AI “… will pose new threats and security challenges, enabling bad actors to scale their operations and bypass content protection policies,” said Resecurity researchers.

The Rise of Criminal AI

DIG AI represents a new class of “criminal AI” tools designed specifically to bypass the content moderation and safety controls embedded in mainstream platforms like ChatGPT, Claude, and Gemini.

Its rapid adoption highlights how quickly modern AI can be repurposed to scale cybercrime, fraud, and extremist activity — often faster than defenders can adapt.

Resecurity identified DIG AI in September 2025 and observed a sharp increase in usage during Q4, particularly over the winter holidays, when global illicit activity historically spikes.

The findings come as organizations prepare for high-profile international events in 2026, including the Milano Cortina Winter Olympics and the FIFA World Cup, occasions that have traditionally attracted elevated cyber and physical security threats.

A Dark Web AI Built for Crime

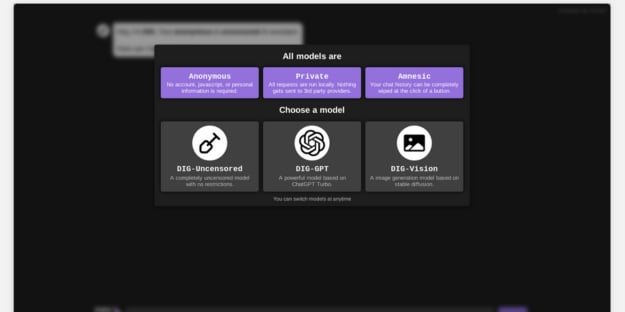

Unlike legitimate AI platforms, DIG AI does not require user registration and is accessible through the Tor browser in just a few clicks.

According to Resecurity’s testing, the tool can generate content across a wide range of illegal domains, including fraud schemes, malware development, drug manufacturing instructions, and extremist propaganda.

Researchers confirmed that DIG AI can produce functional malicious scripts capable of backdooring vulnerable web applications and automating scam campaigns.

When paired with external APIs, the platform allows bad actors to scale operations efficiently — reducing costs while increasing output.

Although some resource-intensive tasks, such as code obfuscation, can take several minutes to complete, analysts note that these delays could easily be offset through paid premium service tiers.

DIG AI’s operator, known by the alias “Pitch,” claims the service is built on ChatGPT Turbo with all safety restrictions removed.

Advertising banners for the tool have appeared on underground marketplaces linked to drug trafficking and stolen payment data, underscoring its appeal to organized criminal networks.

How Criminal AI Is Enabling CSAM

One of the most alarming findings involves DIG AI’s potential role in facilitating AI-generated child sexual abuse material (CSAM).

Generative AI technologies, such as diffusion models and generative adversarial networks (GANs), are increasingly misused to create highly realistic synthetic content that meets legal thresholds for CSAM, even when images are partially or fully fabricated.

Resecurity confirmed that DIG AI can assist in generating or manipulating explicit content involving minors.

Law enforcement agencies globally are already reporting sharp increases in AI-generated CSAM cases, including incidents involving altered images of real children and synthetic content used for extortion or harassment.

In recent years, multiple jurisdictions — including the EU, UK, and Australia — have enacted laws explicitly criminalizing AI-generated CSAM, regardless of whether real minors are depicted.

However, enforcement remains difficult when tools are hosted anonymously on the Dark Web.

The Dark Web Gap in AI Governance

Mainstream AI providers rely on content moderation systems to block harmful outputs, a practice driven by ethical standards and by legal obligations such as the EU AI Act.

These safeguards, however, are largely ineffective against Dark Web-hosted services like DIG AI, which operate outside traditional legal and jurisdictional boundaries.

Criminals are increasingly fine-tuning open-source models, stripping out safety filters, and training systems on contaminated datasets to produce illegal outputs on demand.

This has created an emerging underground economy centered on “AI-as-a-service” for crime — mirroring legitimate business models but with far higher societal risk.

Reducing Risk From AI-Powered Threats

Security teams can take the following practical steps to improve resilience against AI-enabled attacks.

- Strengthen detection and monitoring for AI-assisted phishing, fraud, malware, and automated abuse across email, web, identity, and API attack surfaces.

- Expand threat intelligence programs to include Dark Web marketplaces, criminal AI tools, brand abuse, and early indicators of AI-enabled targeting.

- Harden identity and access controls by enforcing phishing-resistant MFA, least-privilege access, continuous authentication, and zero trust principles.

- Train employees and high-risk teams to recognize AI-generated lures, deepfake impersonation, and synthetic media used in fraud and social engineering.

- Improve incident response readiness by incorporating AI-enabled attack scenarios into tabletop exercises, SOC playbooks, and cross-functional coordination.

- Reduce attack surface and blast radius through network segmentation, continuous exposure management, rate limiting, and proactive protection of public-facing assets.

Collectively, these measures enable organizations to strengthen their security posture and address the risks introduced by AI-enabled threats.
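The rate-limiting item in the list above can be made concrete with a token bucket, a common technique for throttling automated abuse of public-facing APIs. The Python sketch that follows is illustrative only and is not drawn from Resecurity's research: the class names `TokenBucket` and `PerClientLimiter`, the per-client keying, and the capacity and refill values are hypothetical assumptions that a real deployment would need to tune. In practice, many teams enforce this at an API gateway or WAF rather than in application code.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so a new client can make a short burst
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should reject the request, e.g. with HTTP 429


class PerClientLimiter:
    """Hypothetical helper: keeps one independent bucket per client key (API key or IP)."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client: str) -> bool:
        if client not in self.buckets:
            self.buckets[client] = TokenBucket(self.capacity, self.rate, self.clock)
        return self.buckets[client].allow()


if __name__ == "__main__":
    # Demo with a fake clock so the result is deterministic.
    fake_now = [0.0]
    limiter = PerClientLimiter(capacity=3, rate=1.0, clock=lambda: fake_now[0])
    print([limiter.allow("203.0.113.7") for _ in range(5)])  # [True, True, True, False, False]
    fake_now[0] = 2.0  # two seconds later, two tokens have refilled
    print(limiter.allow("203.0.113.7"))  # True
```

Keying buckets by API key or account rather than by IP address may be worth considering, since automated campaigns often rotate source addresses. The in-memory dictionary here would also need eviction, or a shared store, once the service runs on more than one server.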

AI Is Reshaping Modern Cyber Threats

DIG AI is not an isolated development, but an early indicator of how weaponized artificial intelligence is beginning to reshape the broader threat landscape.

As criminal and extremist actors adopt autonomous AI systems, security teams must contend with threats operating at far greater scale, speed, and efficiency than traditional human-driven attacks.

Because these AI-enabled threats can outpace and bypass traditional perimeter-based defenses, organizations are increasingly turning to zero-trust principles as a foundational way to limit risk and contain impact.