Cloudflare has confirmed that a global outage on November 18, 2025, which disrupted access to thousands of websites and services, including X, was caused by a misconfigured internal feature file used by its Bot Management system.

The file exceeded a predefined size limit, crashing core proxy services that route traffic across Cloudflare’s global network.

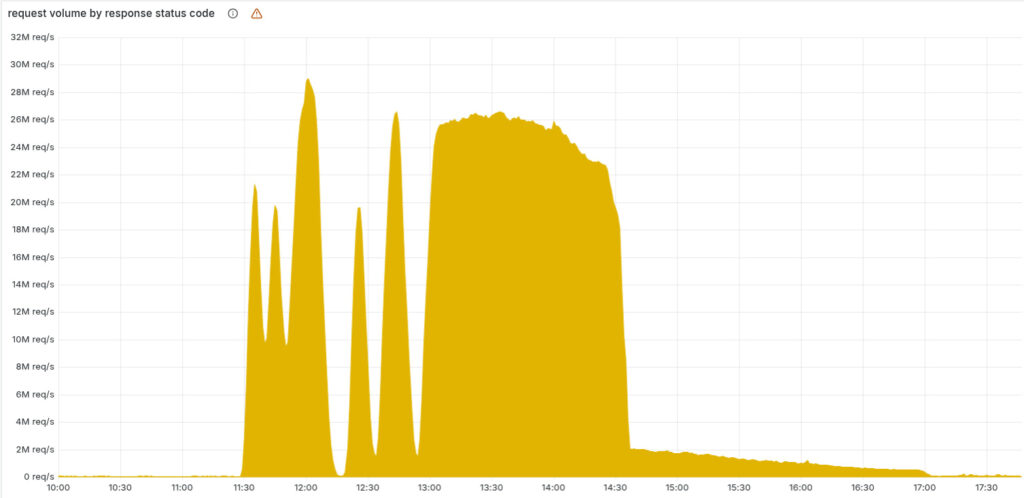

The incident began at 11:20 UTC and persisted for several hours, with HTTP 500-level errors surfacing globally as Cloudflare’s core proxy systems failed to process traffic. According to an in-depth postmortem by Cloudflare CEO Matthew Prince, the disruption was not caused by a cyberattack but was instead triggered by a change in database permissions in the ClickHouse system. This change introduced duplicate data into a machine learning feature file used by the company’s Bot Management module, causing the file to exceed its predefined size limit and crashing the proxy systems responsible for routing traffic.
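To make the duplication mechanism concrete, here is a minimal sketch of how a metadata query can start returning each feature twice. It assumes, purely for illustration, that the permission change made the same columns visible through a second database. The row shape, the names `db_a` and `db_b`, and both helper functions are hypothetical and do not come from Cloudflare's code.

```python
from collections import namedtuple

# Hypothetical row shape for a column-metadata query; names are illustrative only.
ColumnRow = namedtuple("ColumnRow", ["database", "table", "column"])


def feature_names(rows):
    """Naive extraction: one feature per metadata row, with no filter on database."""
    return [row.column for row in rows]


def feature_names_deduped(rows):
    """Defensive variant: keep each column name once, preserving first-seen order."""
    return list(dict.fromkeys(row.column for row in rows))


# Before the change: each column is visible once.
before = [ColumnRow("db_a", "features", f"f{i}") for i in range(3)]

# After the change (assumed): the same columns are also visible via a second database.
after = before + [ColumnRow("db_b", "features", f"f{i}") for i in range(3)]
```

In this sketch the naive extraction doubles the feature list once the second set of rows becomes visible, while the deduplicating variant is unaffected.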

Cloudflare’s network architecture is built around a central proxy system, “FL” (Frontline), which processes incoming requests and applies customer-specific security, performance, and access-control policies. The bot-detection system, which scores traffic using a machine learning model that reads a frequently refreshed “feature file,” is a key component of this proxy. When the file suddenly ballooned in size, doubling due to improperly filtered metadata, FL2, the newer version of the proxy engine, hit a hardcoded limit of 200 features and panicked, returning HTTP 5xx errors for affected requests.
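The failure mode described above can be sketched in a few lines. This is an illustrative model, not Cloudflare's implementation: the 200-feature limit is taken from the postmortem, while the feature count of 120, the exception class, and the function names are assumptions made for the example.

```python
MAX_FEATURES = 200  # the hardcoded limit reported in the postmortem


class FeatureLimitExceeded(Exception):
    """Raised when a feature file holds more entries than the preallocated limit."""


def load_features(names, limit=MAX_FEATURES):
    """Load a feature list, refusing anything above the fixed limit."""
    if len(names) > limit:
        raise FeatureLimitExceeded(f"{len(names)} features exceeds limit of {limit}")
    return list(names)


def handle_request(names):
    """Return an HTTP-like status code; a failed load surfaces as a 500."""
    try:
        load_features(names)
    except FeatureLimitExceeded:
        return 500
    return 200


# Hypothetical sizes: the real feature count is not stated in this article.
normal = [f"feature_{i}" for i in range(120)]
doubled = normal * 2  # duplicated entries push the file past the limit
```

With these assumed sizes, a file that is comfortably under the limit starts failing every request once its contents are doubled.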

Cloudflare is a cornerstone of modern web infrastructure, operating one of the largest content delivery networks (CDNs) and providing DDoS protection, application firewalling, and secure access tools to a vast customer base. The company also hosts edge computing platforms like Workers and storage services such as Workers KV, both of which were affected during the outage. Cloudflare’s dashboard, used by administrators to manage services, experienced degraded performance and authentication failures due to the knock-on effects of the proxy system failure.


During the outage, the bot module began crashing intermittently as new versions of the malformed feature file propagated across Cloudflare’s network every 5 minutes. This caused an unusual pattern of recovery and failure, complicating initial diagnostics. Engineers first suspected a large-scale DDoS attack, especially after the company’s independent status page, hosted outside Cloudflare, also went offline due to an unrelated issue.

By 13:05 UTC, Cloudflare had implemented a temporary workaround, bypassing the failing proxy for key services like Workers KV and Access. The true cause was confirmed by 14:24, at which point engineers halted the generation and propagation of the faulty feature file and pushed a known-good configuration across the network. By 14:30, most services had stabilized, and full recovery was achieved by 17:06 UTC.
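For readers who want the elapsed times, the timestamps above can be turned into durations with a short calculation. The dictionary keys and the helper name are labels chosen for this sketch. All times are UTC on the same day.

```python
from datetime import datetime


def minutes_between(start, end):
    """Whole minutes between two same-day HH:MM timestamps."""
    fmt = "%H:%M"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds() // 60)


# Timestamps as reported in the article (UTC).
timeline = {
    "start": "11:20",
    "workaround": "13:05",
    "cause_confirmed": "14:24",
    "mostly_stable": "14:30",
    "full_recovery": "17:06",
}

# Minutes elapsed since the start of the incident for each milestone.
elapsed = {
    name: minutes_between(timeline["start"], stamp)
    for name, stamp in timeline.items()
    if name != "start"
}
```

By this arithmetic, the workaround landed 105 minutes in, most services stabilized after 190 minutes (3 hours 10 minutes), and full recovery took 346 minutes (5 hours 46 minutes).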

The impact of the outage was far-reaching:

- Core CDN and security services: End users attempting to access Cloudflare-protected sites encountered HTTP 500 errors.

- Turnstile: The company’s CAPTCHA alternative failed, blocking dashboard logins and other authentication flows.

- Workers KV: Elevated error rates due to dependency on the failing core proxy.

- Cloudflare Access: Widespread authentication failures until the bypass was implemented.

- Email security: Temporary degradation in spam detection accuracy due to lost access to IP reputation data.

Cloudflare has labeled this its most severe outage since 2019 and acknowledged significant architectural shortcomings that allowed internally generated data to crash production systems. As a result, the company is undertaking a series of mitigations, including hardening internal configuration ingestion pipelines, introducing global kill switches for critical features, and improving memory error handling to prevent proxy crashes.
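Two of the mitigations, hardened configuration ingestion and a global kill switch, can be illustrated with a small sketch. It assumes a loader that validates each new feature file and keeps the last known-good version when validation fails. The class, its attributes, and the specific validation rules are hypothetical and are not a description of Cloudflare's actual design.

```python
class BotConfigLoader:
    """Illustrative loader that treats internally generated files as untrusted input."""

    def __init__(self, limit=200):
        self.limit = limit        # maximum number of features accepted
        self.active = None        # last known-good feature list, if any
        self.kill_switch = False  # global switch to bypass the module entirely

    def ingest(self, candidate):
        """Validate a new feature file; return True if it replaced the active one."""
        names = list(candidate)
        too_large = len(names) > self.limit
        has_duplicates = len(set(names)) != len(names)
        if not names or too_large or has_duplicates:
            # Reject the file and keep serving with the previous version.
            return False
        self.active = names
        return True

    def scoring_enabled(self):
        """Bot scoring runs only with a valid config and the kill switch off."""
        return not self.kill_switch and self.active is not None


loader = BotConfigLoader()
good = [f"feature_{i}" for i in range(50)]  # hypothetical feature count
accepted_good = loader.ingest(good)
accepted_bad = loader.ingest(good * 5)      # oversized and duplicated: rejected
```

The design choice in this sketch is that a bad file degrades nothing: the loader keeps the previous configuration, and operators can still set `kill_switch` to disable scoring without touching the rest of the request path.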

This outage is indicative of the fragility of centralized infrastructure in the modern Internet stack and the outsized impact configuration drift can have, even without external interference. While the speed of Cloudflare’s recovery reflects operational maturity, the incident caused massive disruptions worldwide, translating to significant financial impact for affected firms.
