OpenAI has announced the launch of an “agentic security researcher” that’s powered by its GPT-5 large language model (LLM) and is programmed to emulate a human expert capable of scanning, understanding, and patching code.

The artificial intelligence (AI) company said the autonomous agent, called Aardvark, is designed to help developers and security teams flag and fix security vulnerabilities at scale. It is currently available in private beta.

“Aardvark continuously analyzes source code repositories to identify vulnerabilities, assess exploitability, prioritize severity, and propose targeted patches,” OpenAI noted.

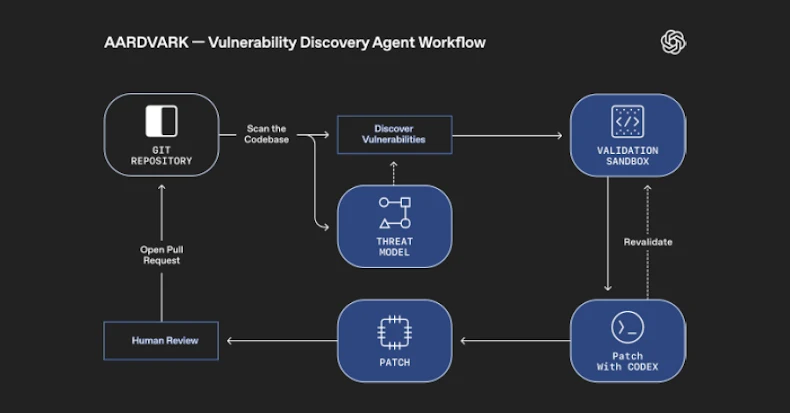

It works by embedding itself into the software development pipeline, monitoring commits and changes to codebases, detecting security issues and how they might be exploited, and proposing fixes using LLM-based reasoning and tool use.

Powering the agent is GPT-5, which OpenAI introduced in August 2025. The company describes it as a "smart, efficient model" with deeper reasoning capabilities, courtesy of GPT-5 thinking, and a "real-time router" that decides which model to use based on conversation type, complexity, and user intent.

Aardvark, OpenAI added, analyzes a project's codebase to produce a threat model that it considers the best representation of the project's security objectives and design. With this contextual foundation, the agent scans the repository's history to identify existing issues and scrutinizes incoming changes to detect new ones.

Once it finds a potential security defect, Aardvark attempts to trigger it in an isolated, sandboxed environment to confirm that it is exploitable, then uses OpenAI Codex, the company's coding agent, to produce a patch for a human analyst to review.

OpenAI said it has been running the agent across its internal codebases and those of some external alpha partners, and that it has helped identify at least 10 CVEs in open-source projects.

The AI upstart is far from the only company trialing AI agents for automated vulnerability discovery and patching. Earlier this month, Google announced CodeMender, which it said detects, patches, and rewrites vulnerable code to prevent future exploits. The tech giant also said it intends to work with maintainers of critical open-source projects to integrate CodeMender-generated patches and help keep those projects secure.

Viewed in that light, Aardvark, CodeMender, and XBOW are being positioned as tools for continuous code analysis, exploit validation, and patch generation. Aardvark's launch also comes close on the heels of OpenAI's release of the gpt-oss-safeguard models, which are fine-tuned for safety classification tasks.

“Aardvark represents a new defender-first model: an agentic security researcher that partners with teams by delivering continuous protection as code evolves,” OpenAI said. “By catching vulnerabilities early, validating real-world exploitability, and offering clear fixes, Aardvark can strengthen security without slowing innovation. We believe in expanding access to security expertise.”