Artificial Intelligence (AI) has redefined what’s possible in modern cybersecurity. From detecting threats in milliseconds to automating data analysis, AI offers unmatched advantages. But as organizations embrace it, one question grows louder: how is AI an issue?

While AI has strengthened our defenses, it has also created new vulnerabilities. Attackers now exploit the same algorithms designed to protect us. Businesses depend heavily on machine intelligence without fully understanding its limitations or ethical implications.

For cybersecurity specialists, IT leaders, and executives, the challenge is clear—AI is both the shield and the sword. Understanding how AI becomes a security issue is no longer optional; it’s mission-critical.

Why Asking “How Is AI an Issue” Matters

AI isn’t inherently dangerous—it’s how it’s designed, trained, and deployed that makes it risky. AI models depend on massive amounts of data, complex algorithms, and continuous human oversight. When any of these components fail, the consequences can be serious.

Here’s why this question matters today:

-

Attackers use AI to outsmart defenses. Machine learning can be weaponized to identify weaknesses and create realistic phishing or deepfake attacks.

-

AI systems themselves are attack targets. From model poisoning to adversarial inputs, hackers exploit weaknesses in AI models.

-

Businesses overestimate AI’s reliability. Blind trust in AI can result in critical oversights, especially in regulated sectors like healthcare, finance, and government.

Understanding how AI introduces these problems is the first step toward securing your digital environment.

AI Security Risks: The Hidden Dangers Inside Smart Systems

AI systems are vulnerable in ways traditional software isn’t. Their intelligence depends on data and training models, which can be manipulated, corrupted, or biased.

Let’s explore the biggest AI security risks every organization should be aware of.

1. Data Poisoning Attacks

AI learns from data—but what happens when that data is maliciously altered?

Data poisoning occurs when attackers feed false or misleading information into training datasets. Over time, the AI “learns” incorrect behaviors, leading to compromised outputs.

For instance, an AI spam filter could be trained to allow harmful emails through, or a financial model could misclassify fraud patterns.

2. Adversarial Attacks

Adversarial attacks manipulate input data to trick AI into making wrong predictions. A small change in an image, sentence, or dataset could fool even the most advanced AI.

Cybercriminals use these methods to evade detection systems, sneak malware past filters, or impersonate legitimate users.

3. Model Theft and Reverse Engineering

When AI models are exposed through APIs or public access, they can be stolen, replicated, or reverse-engineered. Once copied, attackers can test and manipulate them offline before launching attacks.

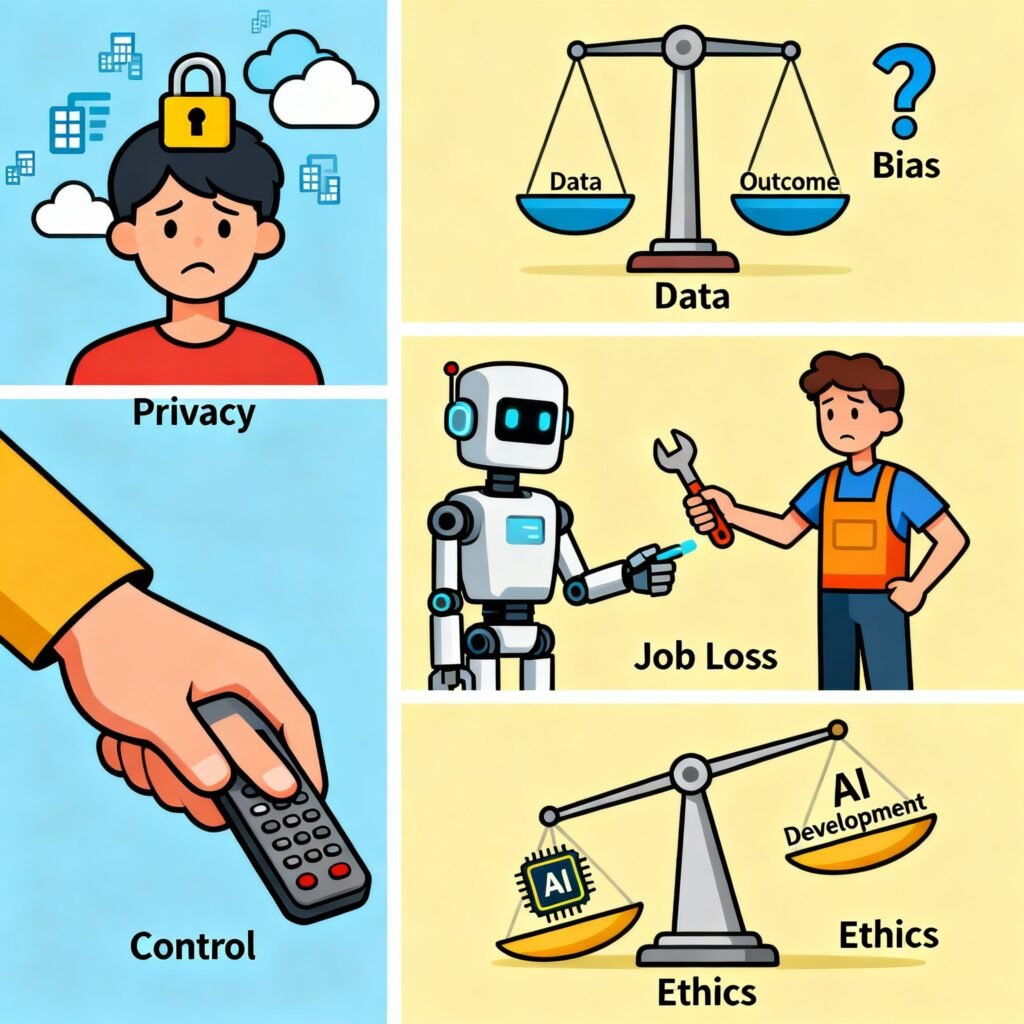

4. Privacy and Data Exposure

AI systems handle vast datasets—often containing personal or proprietary information. Poor security practices or misconfigured systems can lead to major data leaks.

5. Over-Reliance on AI

Organizations that assume “AI handles everything” risk falling into complacency. AI is a tool, not an autonomous solution. Without human oversight, errors can go unnoticed, resulting in financial or reputational damage.

AI Threat Surface Expansion: The Growing Cyber Battlefield

As AI becomes integrated into every part of an organization—network security, operations, and customer service—it also expands the threat surface.

1. More Integrations, More Entry Points

AI connects to APIs, IoT devices, and third-party systems. Each connection increases the attack surface. If one integration is weak, the entire ecosystem is vulnerable.

2. Real-Time Decision Risks

AI systems make rapid, automated decisions. If an attacker manipulates data or algorithms, those decisions could lead to catastrophic results—like granting unauthorized access or misidentifying legitimate users as threats.

3. Opaque “Black Box” Decisions

AI algorithms often lack transparency. When AI makes a mistake, understanding why can be difficult. This lack of visibility complicates security audits and forensics.

4. Shadow AI

Employees often use unsanctioned AI tools—like chatbots, code generators, or analytics models—without IT approval. These “shadow AI” tools may violate compliance or leak sensitive data to third-party vendors.

AI Bias in Cybersecurity: When Machines Get It Wrong

Bias isn’t just a social issue—it’s a cybersecurity issue too.

AI bias occurs when models are trained on unbalanced or incomplete data, leading to skewed decisions. This is especially dangerous in cybersecurity contexts where AI determines what constitutes a “threat.”

Examples of AI Bias in Security

-

A fraud detection system trained mostly on Western transactions might fail to detect fraud in Asian markets.

-

A facial-recognition tool could misidentify people from certain demographics, leading to false alerts or discrimination.

Why This Matters

-

Unfair Outcomes: Biased AI can unfairly target or exclude groups.

-

False Security: If the AI misidentifies threats, attackers can slip through.

-

Legal Risks: Biased systems can lead to compliance violations and lawsuits.

How to Fix It

-

Use diverse and representative datasets.

-

Conduct regular bias audits.

-

Combine AI decisioning with human review for critical systems.

AI Misuse by Attackers: The Dark Side of Innovation

AI has become a powerful weapon in the hands of cybercriminals. Understanding how attackers exploit AI is key to defending against them.

1. Automated Phishing Campaigns

AI can generate convincing phishing emails that mimic tone, writing style, and even sender identity. Attackers use natural language models to personalize attacks at scale.

2. Deepfakes and Voice Cloning

AI-generated deepfakes can impersonate executives, celebrities, or politicians. These have been used for fraud, extortion, and disinformation campaigns.

3. AI-Driven Malware

AI helps attackers design adaptive malware that evolves to evade detection. These self-learning threats can modify behavior based on a network’s defenses.

4. Reconnaissance and Exploitation

Machine learning algorithms analyze vulnerabilities faster than any human hacker could. Attackers can identify weak configurations, outdated systems, or exploitable flaws in minutes.

5. Prompt Injection Attacks

In generative AI systems, prompt injection attacks trick models into revealing secrets or executing harmful commands. This emerging threat shows how attackers manipulate language models for exploitation.

Managing AI Security: A Practical Framework

Knowing that AI introduces risk isn’t enough—you need a plan. Here’s a practical framework to manage and mitigate AI-related security issues.

1. Establish AI Governance

Define who owns AI risk. Create policies for model development, deployment, and monitoring. Governance should include cybersecurity, compliance, and executive oversight.

2. Build a Comprehensive AI Inventory

Maintain a register of all AI systems in use—both internal and third-party. Include details on datasets, model types, and integrations.

3. Assess AI-Specific Threats

Perform risk assessments tailored to AI. Identify vulnerabilities like data poisoning, model drift, or privacy exposure.

4. Secure the AI Lifecycle

-

Use secure data sources and verify training datasets.

-

Encrypt data in transit and at rest.

-

Implement version control for models.

-

Conduct adversarial testing to find weaknesses.

5. Monitor and Audit Continuously

Track model behavior over time. Unusual outputs, drift, or false results can signal compromise. Log AI decisions and review them regularly.

6. Foster Transparency and Explainability

Avoid “black box” AI. Use interpretable models that can explain their decision paths—critical for audits, compliance, and incident response.

7. Educate Teams on AI Risks

Train employees to recognize AI-driven threats like phishing and deepfakes. Awareness is still the strongest defense against manipulation.

Creating an AI-Safe Culture

Technology alone can’t secure AI—you need people, process, and culture.

-

Cross-Department Collaboration: AI security isn’t just IT’s job. Legal, HR, and compliance must be involved.

-

Ethical AI Practices: Build models aligned with your company’s values and ethical guidelines.

-

Incident Readiness: Prepare response plans specifically for AI failures or misuse.

-

Continuous Learning: As AI evolves, so should your security posture.

Key Metrics to Measure AI Risk Management

To maintain visibility and accountability, monitor these metrics:

-

Number of AI systems under governance.

-

Frequency of AI audits or bias tests.

-

Detection time for anomalies in AI behavior.

-

Volume of AI-related incidents.

-

Percentage of AI models with human oversight protocols.

These metrics help executives track AI’s impact and security posture over time.

Turning Risk into Advantage

AI doesn’t have to be a liability. When managed effectively, it strengthens your cyber defenses and operational efficiency. The key lies in understanding how AI becomes an issue—then designing systems to control, monitor, and govern it.

For business leaders, this means asking:

-

Are our AI systems transparent and accountable?

-

Do we have cross-team oversight on AI risk?

-

Can we explain AI decisions to regulators or customers?

When those questions have confident answers, AI becomes an asset, not a threat.

Conclusion: Managing AI Risks Before They Manage You

AI’s impact on cybersecurity is both revolutionary and risky. The same technologies defending your systems can, if mismanaged, turn against you.

By focusing on governance, transparency, and proactive monitoring, organizations can turn the question—how is AI an issue?—into a roadmap for safer innovation.

The time to act is now. Conduct your first AI risk assessment, update your security framework, and make AI accountability part of your leadership agenda.

Call-to-Action:

Empower your teams to balance innovation with responsibility. Start today by identifying your top three AI systems and mapping out their risks and dependencies. The future of cybersecurity depends on how we manage AI—not how AI manages us.

FAQ Section

1. Why is AI considered a cybersecurity issue?

Because AI introduces new vulnerabilities like data poisoning, model bias, and misuse by attackers. It also expands the threat surface across integrated systems.

2. What are the biggest AI security risks for businesses?

Data leakage, adversarial attacks, model theft, shadow AI usage, and deepfake-based social engineering are top risks.

3. How can attackers misuse AI?

Attackers use AI for automated phishing, vulnerability scanning, malware generation, and identity impersonation through deepfakes or voice cloning.

4. How can companies prevent AI misuse?

Implement governance frameworks, monitor AI systems continuously, and train staff on responsible AI use and threat awareness.

5. What is AI bias, and how does it affect security?

AI bias leads to inaccurate threat assessments, unfair decision-making, and exploitable weaknesses when attackers understand those patterns.

6. What steps help secure AI systems?

Data validation, encryption, adversarial testing, transparency tools, and continuous monitoring are key.

7. How does AI expand the attack surface?

AI’s integrations across cloud, IoT, and APIs create more entry points for attackers. Every connection introduces potential exposure.

8. Can AI be trusted for cybersecurity?

Yes—but only with strong governance, transparency, and human oversight. AI should enhance, not replace, human judgment.