OpenAI has announced ChatGPT Atlas, a new AI-native web browser built around ChatGPT, designed to act as an intelligent assistant that can browse the web, take actions, and remember context across sessions.

Atlas debuts with a native macOS client, available today for Free, Plus, Pro, and Go users, and enters beta for Business, Enterprise, and Edu tiers.

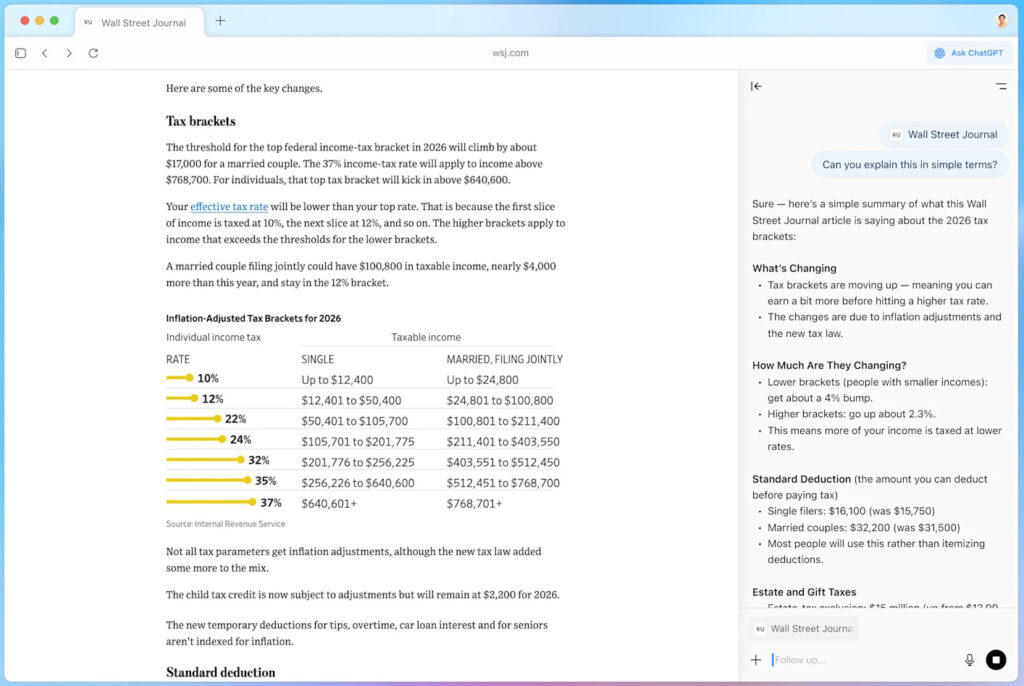

Atlas is OpenAI’s latest step toward embedding ChatGPT more deeply into daily workflows, combining browsing with memory, personalization, and agentic automation. The browser brings ChatGPT directly into the user’s tabs, allowing it to analyze content, carry out tasks, and remember context from visited pages. These “browser memories” are private by default and can be deleted or disabled entirely, OpenAI says.

The standout feature is Agent Mode, which allows ChatGPT to perform multi-step tasks in real-time. For example, users can ask it to summarize a job search from last week, plan an event using multiple sites, or even autofill and place grocery orders. Unlike traditional assistants, the agent works directly within the browser context, clicking links, navigating tabs, and acting autonomously when permitted. This preview feature is currently limited to Plus, Pro, and Business users.

OpenAI emphasizes that Agent Mode cannot run code, download files, or install extensions, and it pauses before taking sensitive actions, such as those involving financial sites. Still, it acknowledges that the agent remains susceptible to mistakes and adversarial inputs.

Next-gen browsing, new attack surface

Atlas arrives amid mounting evidence that AI-driven browsers can be easily deceived into unsafe actions. In CometJacking, a vulnerability disclosed by LayerX, a single crafted URL could hijack Perplexity’s Comet assistant and exfiltrate Gmail and calendar data to an attacker’s server, no clicks required.

Brave’s follow-up research showed similar weaknesses, where invisible text hidden in screenshots or page content could inject commands, causing the AI to act maliciously even when the user simply opened a page.

Guardio Labs’ earlier experiments exposed more practical dangers. Comet was tricked into buying an Apple Watch from a fake Walmart clone, clicking through a Wells Fargo phishing email, and even downloading a file from a fake “captcha” page hiding attacker prompts, a technique dubbed PromptFix.

Each attack succeeded because the AI treated malicious webpage content as trusted input, bypassing human judgment and browser security boundaries.

OpenAI claims that Atlas’s agent is designed with strong safeguards, including restricted site actions, incognito modes, and visibility toggles in the address bar to limit what ChatGPT can see. It also reiterates that browsing activity is not used to train models by default, and that users can opt out of all memory and data collection features.

While OpenAI has conducted red-teaming and implemented live-monitoring systems, it concedes that some forms of abuse, especially those involving cleverly crafted adversarial prompts, may still succeed, particularly when the agent operates with browsing context and memory enabled.

Precautionary steps for early Atlas adopters include using the agent mode in logged-out sessions, disabling browser memories, and monitoring agent actions closely.

If you liked this article, be sure to follow us on X/Twitter and also LinkedIn for more exclusive content.